Guardrailing Intuition: Towards Reliable AI

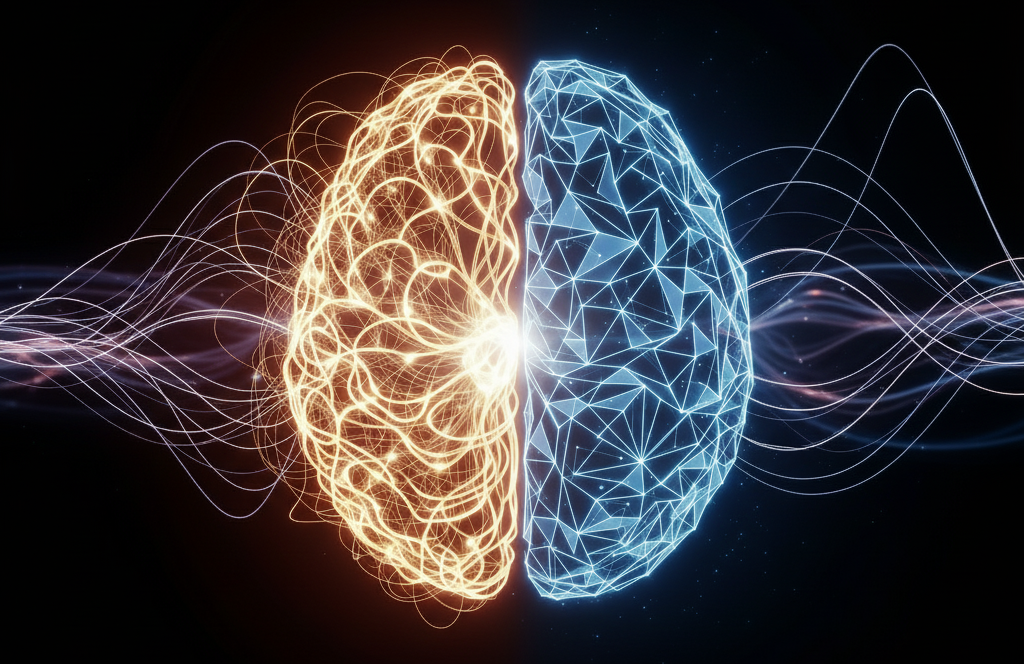

The world of Artificial Intelligence was born from two distinct schools of thought. On one side were the pioneers of logic and symbolic AI, like Marvin Minsky, who believed in representing knowledge through symbols and rules. On the other were the connectionists, like Frank Rosenblatt, who were inspired by the human brain and its network of neurons. For a long time, these two camps charted separate courses. With the meteoric rise of Large Language Models (LLMs), it seems the connectionist, or intuition-based, approach has claimed victory.

These LLMs are marvels of intuition. They can write poetry, translate languages, and even generate code. But for all their fluency, they lack a crucial element: precision. While logic may be an emergent behavior in the language they model, it is not their native tongue. This is where we, as engineers and architects, face a new and formidable challenge.

The Precision Problem in an AI World

LLMs, for all their power, are not planners. Their reasoning is a consequence of language structure, not a deep, logical understanding. This leads to what I call the “YOLO-factor” in running AI systems. We are, in essence, hoping for the best.

A stark reminder of this came with the incident at Replit, where an AI agent’s error led to the deletion of a user’s database. This wasn’t a malicious act, but a failure of precision—the YOLO-factor at work. It highlights a critical challenge in our embrace of intuitive AI. We are building systems with immense power but without the necessary guardrails.

The core challenge lies at the interface between the AI’s probabilistic world and the systems it tries to control. To instruct a traditional system, an AI must generate structured data, API calls, resource definitions, and more. When any of these lack precision, the consequences are direct and often critical.

This problem extends beyond just agentic behavior. Here are a handful of issues we identified with AI systems:

- Data Integrity Risks: The structured output generated by AI can contain subtle but critical errors, leading to downstream failures.

- Admission Control Roulette: AI systems can generate messages and API calls that, if left unchecked, could violate system constraints or security policies.

- Lack of Shared Practices: The AI ecosystem lacks standardized methods for sharing and reusing proven, yet often complex, configuration patterns. This leads to a reliance on manual setup and a reinvention of the wheel with every new project.

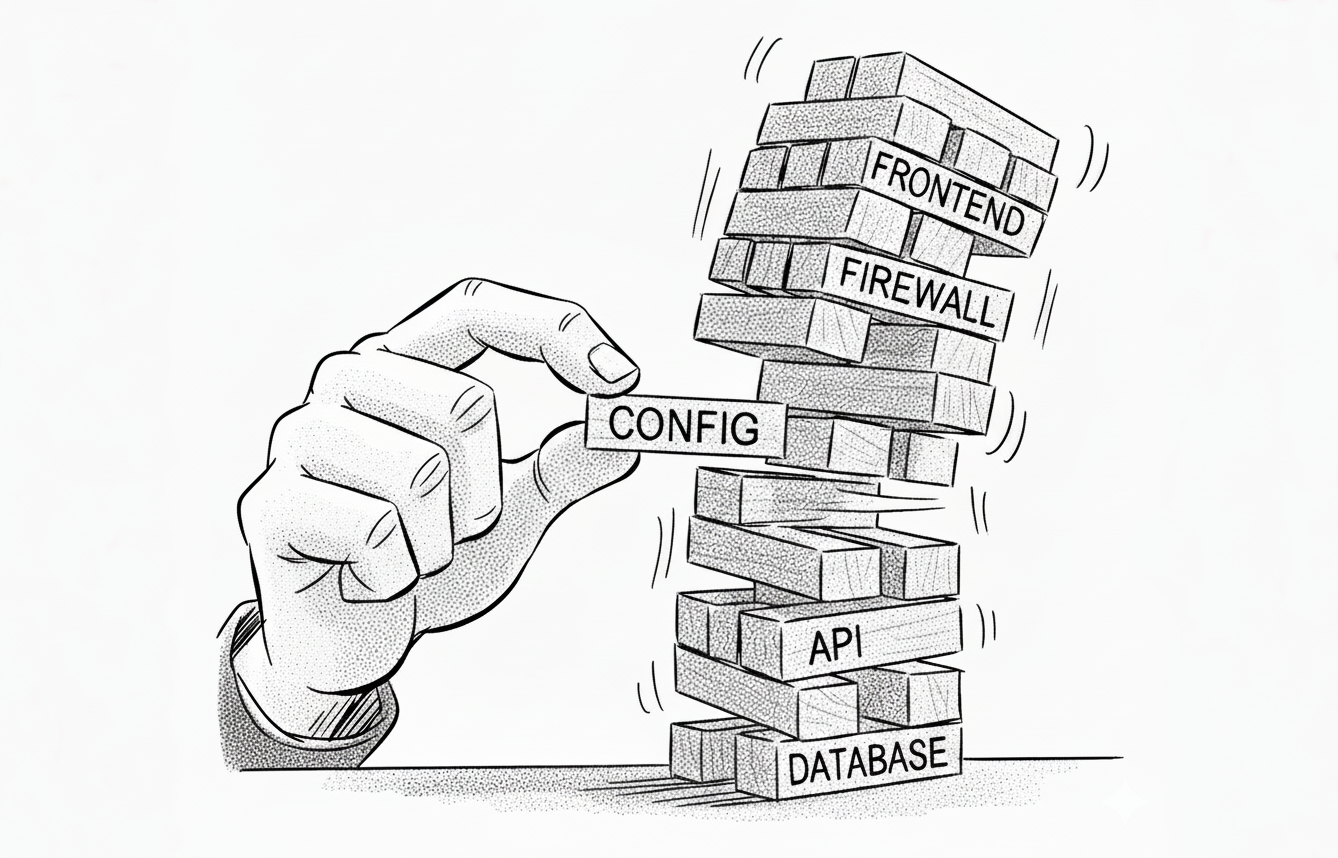

- Lack of Composability: A system’s integrity demands a holistic approach to validation. But inputs from humans and machines, arriving in countless formats, and from different departments, create a Tower of Babel of configuration. Unifying this chaos is essential, yet in practice, everything remains siloed, leading to unnecessary errors.
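
To make the first two risks concrete, here is a minimal Python sketch of checking an AI-generated tool call before it reaches a real system. The shape of the call, the field names (`action`, `target`, `confirm`), and the policy are all hypothetical, chosen only for illustration; they are not taken from any particular agent framework.

```python
import json

# Hypothetical schema: field name -> required Python type.
SCHEMA = {"action": str, "target": str, "confirm": bool}

# Hypothetical policy: actions that may never run against production.
DESTRUCTIVE_ACTIONS = {"drop_database", "delete_table"}


def check_tool_call(raw: str) -> list[str]:
    """Return a list of violations; an empty list means the call is admitted."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as err:
        return [f"not valid JSON: {err.msg}"]
    if not isinstance(call, dict):
        return ["top-level value must be an object"]

    errors = []
    # Data integrity: every field must be present with the expected type.
    for field, expected in SCHEMA.items():
        if field not in call:
            errors.append(f"missing field: {field}")
        elif not isinstance(call[field], expected):
            errors.append(f"{field}: expected {expected.__name__}")
    # Closed schema: reject fields the schema does not know about.
    for field in call:
        if field not in SCHEMA:
            errors.append(f"unknown field: {field}")
    # Admission control: block destructive actions on production targets.
    action, target = call.get("action"), call.get("target")
    if (
        isinstance(action, str)
        and isinstance(target, str)
        and action in DESTRUCTIVE_ACTIONS
        and target.startswith("prod")
    ):
        errors.append("policy: destructive action on a production target")
    return errors
```

The point of the sketch is the placement of the check, not its sophistication: the call is rejected at the boundary, before any system acts on it.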

By focusing so heavily on connectionism, we have neglected what computers do best: painstakingly dotting i’s and crossing t’s. We have, in a sense, thrown the baby out with the bathwater.

A Classic Configuration Challenge

So, what is the solution? In our view, this failure of precision in AI is a classic configuration correctness problem.

For decades, we have improved the reliability of systems by adding layers of constraints to configurations. Think of database schemas validating application data, firewall rules constraining network traffic, or admission control policies ensuring API calls are safe and compliant.

AI requires the same discipline. Luckily, we can draw on the principles learned over these decades to apply the same rigor to intuitive and often unpredictable AI outputs:

- Composable Guardrails: A robust system allows validation logic to be layered and composed from multiple sources. The constraints from a company-wide security policy, a team’s best practices, and the specific needs of a user request can all be combined to form a single, holistic set of rules.

- Shift Left and Fail Early: The ‘shift left’ principle—finding errors early to prevent costly outages—applies directly to agentic AI. Instant validation creates a rapid feedback loop that stops an agent from pursuing dead-end strategies. The result is faster iteration towards a correct solution and reduced inference cost.

- Share and Reuse: A well-defined configuration model allows proven, battle-tested data blocks and validation logic to be packaged and shared. This builds a library of best practices, ensuring consistency and preventing teams from reinventing the wheel.
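
As a rough illustration of the first two principles, the Python sketch below layers guardrails from three sources into a single check that runs before anything is applied. The three rules (`org_policy`, `team_practice`, `request_constraint`) and the config fields they inspect are hypothetical. The sketch only concatenates independent checks; it does not attempt to model CUE's unification.

```python
from typing import Callable

# A guardrail takes a proposed config and returns a list of violations.
Guardrail = Callable[[dict], list[str]]


def compose(*guardrails: Guardrail) -> Guardrail:
    """Combine guardrails from several sources into one check."""
    def combined(config: dict) -> list[str]:
        violations = []
        for guardrail in guardrails:
            violations.extend(guardrail(config))
        return violations
    return combined


# Hypothetical company-wide security policy.
def org_policy(config: dict) -> list[str]:
    if config.get("public") is False:
        return []
    return ["org: resources must set public to false"]


# Hypothetical team best practice.
def team_practice(config: dict) -> list[str]:
    replicas = config.get("replicas")
    is_int = isinstance(replicas, int) and not isinstance(replicas, bool)
    if is_int and replicas >= 2:
        return []
    return ["team: replicas must be an integer of at least 2"]


# Hypothetical constraint derived from the user's request.
def request_constraint(config: dict) -> list[str]:
    if config.get("region") == "eu-west-1":
        return []
    return ["request: region must be eu-west-1"]


# One holistic rule set, assembled from all three layers.
check = compose(org_policy, team_practice, request_constraint)
```

Because every layer reports against the same candidate in one pass, an agent sees all violations at once instead of discovering them one failed deployment at a time. Each guardrail is also an ordinary function, so it can be packaged and reused across projects.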

We have observed a significant gap between these engineering principles and their current application in the AI ecosystem. This suggests there is considerable room for improvement across the board.

CUE: From Principle to Practice

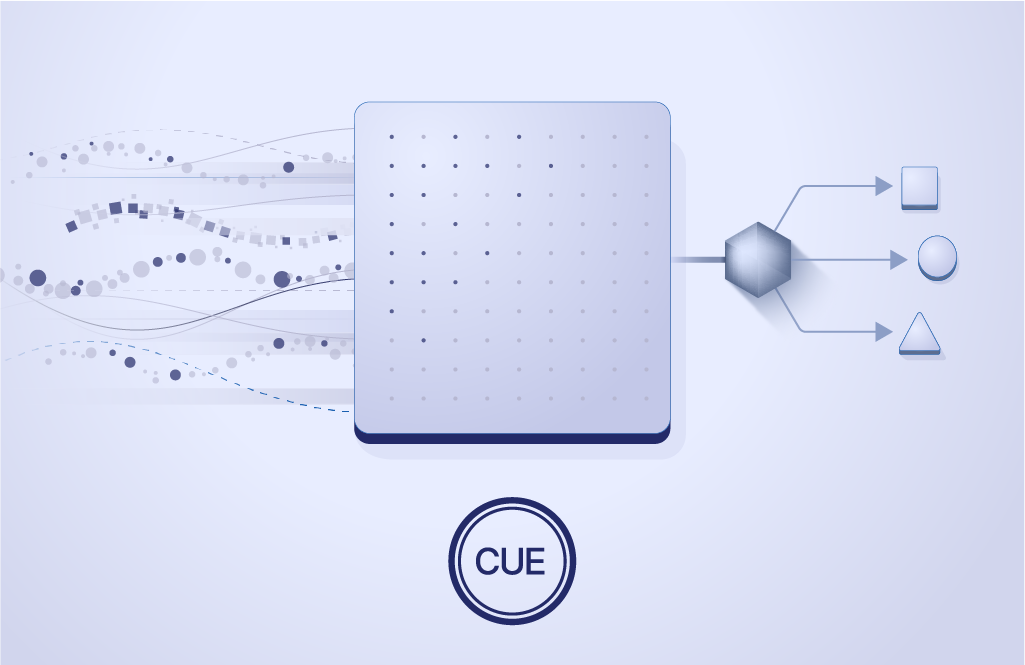

These principles are not just theoretical; they are at the core of CUE.

One of CUE’s foundational strengths is its ability to understand and unify a variety of configuration formats. This is what enables “shifting left”: it allows you to lift scattered definitions from their silos and validate them together as a single, coherent whole. This same unifying power is what enables composable guardrails, allowing a single validation of rules layered together from across an entire organization. Finally, CUE’s native module dependency management provides a robust system for the reuse of proven logic and battle-tested building blocks.

CUE’s power to compose and validate a disparate world of configurations comes from applying a logical formalism, once used to model human language, to modern configuration challenges. It provides a unified framework for modeling data, APIs, policy, and workflows, enabling the unambiguous composition of human and machine-generated elements. Combining this logical rigor with intuitive AI gives us the guardrails to build safe systems.

CUE provides the structural backbone for reliable AI systems by enabling the definition of strict schemas and policies for tool APIs, MCP servers, structured outputs, and much more throughout the AI ecosystem. This enables tight, self-correcting loops where AI-generated output is instantly validated and refined, ensuring every action an agent takes is checked against a central policy to guarantee safe and predictable operation. See these principles in action by exploring our new guide, where we build a simple, real-life, self-correcting coding loop with CUE and Claude Code.
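
The shape of such a self-correcting loop can be sketched in a few lines of Python. This is not the code from the guide, and it uses neither CUE nor Claude Code: `validate` is a hypothetical stand-in for a real policy check, `propose` stands in for a model call, and `stub_model` is a toy that fixes its output once it is told what went wrong.

```python
from typing import Callable, Optional


def validate(candidate: dict) -> list[str]:
    """Hypothetical guardrail: the port must be an unprivileged integer port."""
    port = candidate.get("port")
    if not isinstance(port, int) or isinstance(port, bool):
        return ["port must be an integer"]
    if not 1024 <= port <= 65535:
        return ["port must be between 1024 and 65535"]
    return []


def self_correct(
    propose: Callable[[list[str]], dict],
    max_attempts: int = 3,
) -> Optional[dict]:
    """Request candidates until one validates, feeding errors back each round."""
    feedback: list[str] = []
    for _ in range(max_attempts):
        candidate = propose(feedback)  # stand-in for a model call
        feedback = validate(candidate)
        if not feedback:
            return candidate  # validated: safe to hand on
    return None  # give up; nothing unvalidated escapes the loop


# Stub in place of a real model: it corrects the port once told about the error.
def stub_model(feedback: list[str]) -> dict:
    return {"port": 8080} if feedback else {"port": 80}
```

Calling `self_correct(stub_model)` rejects the first proposal, passes the violation back, and accepts the corrected one. The bound on attempts matters as much as the validation: when no valid candidate appears, the loop returns nothing instead of the last unvalidated guess.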

We are not alone in pursuing this approach. A growing movement, often called neuro-symbolic AI, is dedicated to this very synthesis: using logic to bring structure to connectionist systems. This reinforces our view that the future of AI lies not in choosing a side, but in combining the strengths of both. CUE is a practical and powerful tool for achieving this synthesis today.

A Call for Precision

The journey of integrating AI into the systems we all rely on is just beginning. The challenge is not just to make AI more capable, but to make it provably safe and reliable. With CUE, we can move beyond mere intuition by enforcing logical guardrails that guarantee structural correctness. The result is more robust and predictable systems, with less downtime, lower costs, and greater user trust.

Let us help you replace guesswork with confidence. Get in touch with us to discuss the unique challenges in your architecture and explore how CUE can help you make your AI integrations more reliable.